YouTube recently announced an artificial intelligence feature that predicts a user’s age based on search history and video preferences. Spotify introduced an automated age check that scans your face and estimates your age. Starting to see a trend? Big tech companies are moving to protect younger audiences. While one could argue that these new measures promote digital safety for minors, I believe these age restrictions amount to excessive censorship because they limit access to valuable educational and news content for students under 18.

In high school, students start to make sense of the world and learn about the serious issues occurring around them, and putting up barriers to their knowledge is the surest way to stunt that growth. As a freshman entering high school, I was already being exposed to serious issues in daily life, whether through class discussions or social media. Drugs, sexuality, violence and politics are not foreign concepts to us but realities we are likely to hear about and witness.

According to ABC News, James Besser, director of product management for YouTube Youth, said that “[YouTube] will only allow users who have been inferred or verified as over 18 to view age-restricted content that may be inappropriate for younger users.”

However, “age-restricted” is broadly defined, and it is not limited to explicit content. Footage of wars or protests could be restricted for “violent content,” and documentaries about sexual violence or human rights abuses could be flagged as “adult content.” YouTube is a platform where people have free access to quality journalism and news coverage. Take that resource away from young viewers, and the result is a less informed public.

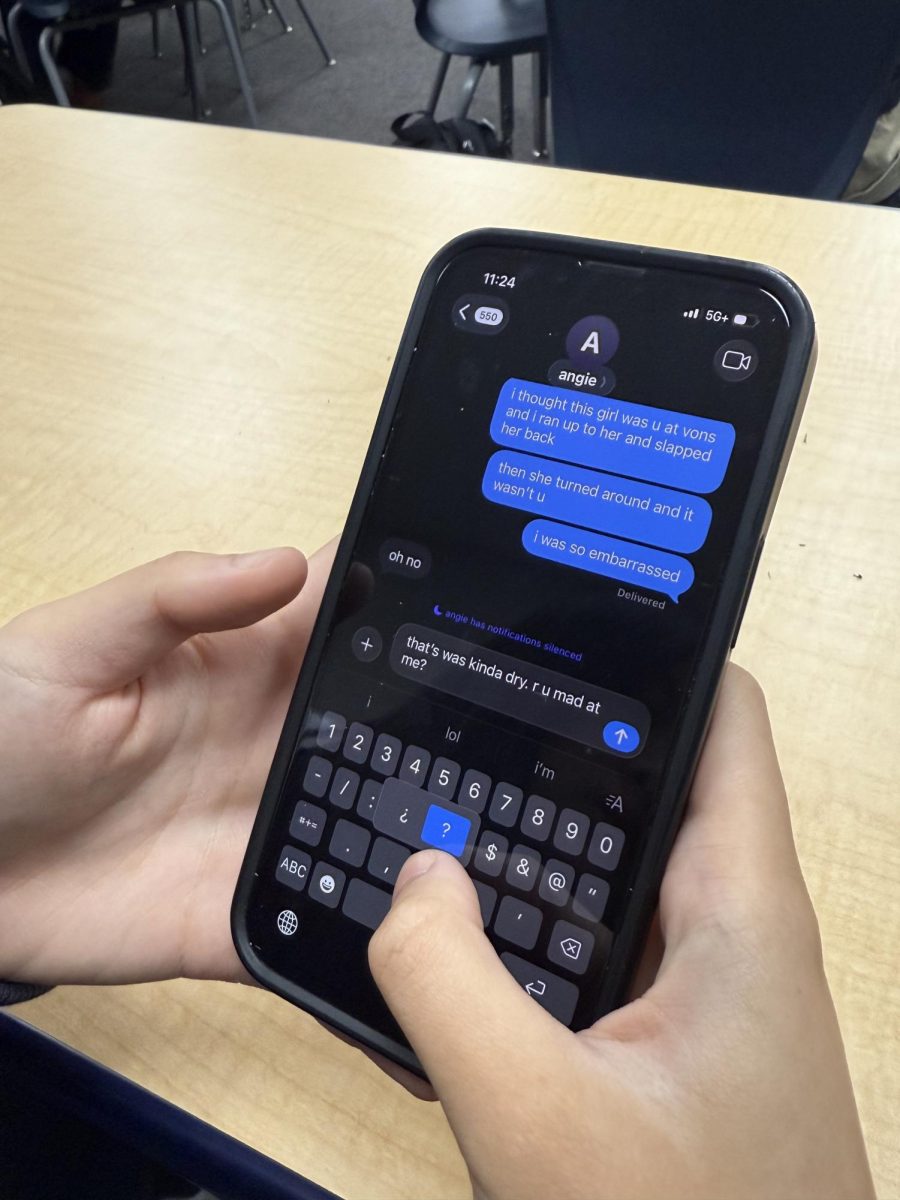

Another issue with this new feature is that it relies on predictions: machine learning algorithms infer your age by tracking the videos you watch. As a result, the system could misclassify an adult who watches content aimed at younger audiences as a minor, and it could identify minors who watch mature content as adults, undermining its intended purpose. Once flagged, a user must verify their age with a government-issued ID or a credit card, adding another layer of complexity to the issue.

Yet the most serious issue is not the age restriction itself but the mass surveillance and data collection that come with it. And it’s not just YouTube. Spotify, Reddit and Steam are all requesting some form of age verification in the name of “protecting kids,” but in practice this normalizes tracking and limits digital freedom. YouTube’s AI age check has already been met with frustration, with a Change.org petition gathering over 128,000 signatures.

If these companies truly aim to keep minors safe, AI surveillance is not the answer. Expanding such policies across more services would create an environment where browsing is tightly monitored and heavy censorship strips users of their autonomy.