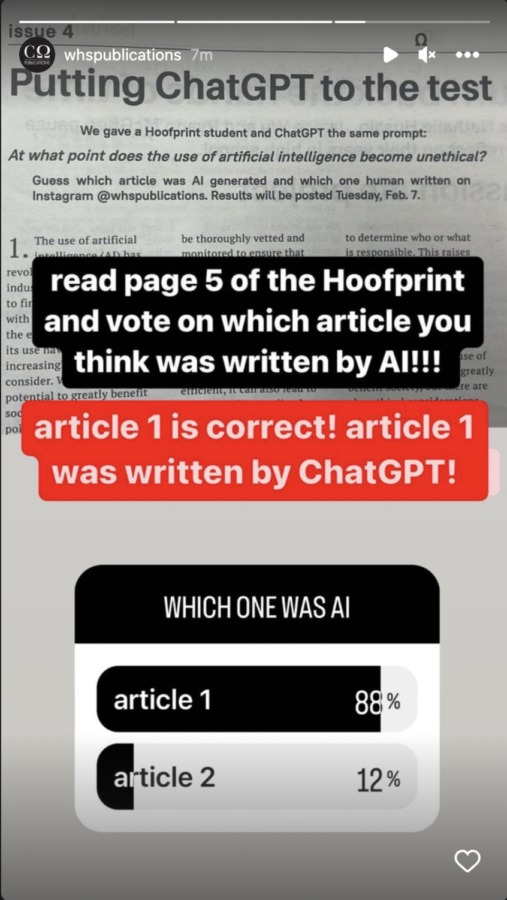

Putting ChatGPT to the test

We gave a Hoofprint student and ChatGPT the same prompt: "At what point does the use of artificial intelligence become unethical?" We then had students guess on Instagram which article was student-written and which was AI-generated.

February 7, 2023

One:

The use of artificial intelligence (AI) has revolutionized various industries, from healthcare to finance. However, as with any new technology, the ethical implications of its use have become increasingly important to consider. While AI has the potential to greatly benefit society, there are certain points at which its use can cross ethical boundaries.

One issue with AI is its potential to perpetuate biases and discrimination. For example, if an AI system is trained on biased data, it may make decisions that unfairly target certain groups. This can have serious consequences, particularly in areas such as employment, housing, and criminal justice. To address this, it is crucial that the data used to train AI systems be thoroughly vetted and monitored to ensure that it is free from any biases or discrimination.

Another ethical concern with AI is its potential to replace human workers. While AI has the potential to automate many tasks and make businesses more efficient, it can also lead to job loss and inequality. In some cases, this can result in entire communities losing their livelihoods, exacerbating existing socio-economic problems. Therefore, it is essential to ensure that the implementation of AI is done in a responsible manner, taking into account its impact on the workforce.

Moreover, there is also a concern about the accountability of AI systems. In the event of a mistake or failure, it can be difficult to determine who or what is responsible. This raises questions about who should be held accountable for the actions of an AI system and what measures should be in place to prevent harm or damage.

In conclusion, the use of AI has the potential to greatly benefit society, but there are also ethical considerations that must be taken into account. As AI continues to evolve, it is important to have ongoing discussions and debates about the ethical implications of its use and to ensure that it is used in a responsible and ethical manner. This will ensure that AI is used for the greater good, rather than causing harm to society or perpetuating inequalities.

Two:

Artificial intelligence (AI) is unavoidable in today’s Digital Age, and many would argue that its advantages, mainly increased efficiency and reduced biases, outweigh its downsides. However, because AI intersects people-driven industries so closely, there is a thin line between making the most out of available technologies and letting them replace human spaces. Since ethics is defined as a set of moral principles that govern a person’s behavior, any AI that infringes on people’s humanity is unethical.

Firstly, one of the biggest concerns about AI is its potential to replace human jobs, especially in fields like manufacturing, data and customer service. This worry is far from unfounded; AI has already automated many previously labor-intensive processes since its inception. However, whereas only unskilled jobs seemed in danger a couple of years ago, rapid advancement of technology has put all professions under threat. From lawyers to doctors, AI is encroaching on white-collar jobs with high entry barriers. Even the creative field has been impacted, with recent controversy surrounding AI-generated art. In short, though AI has streamlined previously repetitive tasks, it is also important to restrict the over-digitization of labor.

AI systems are also praised for their objectivity, but this fails to take into account the inherent biases of their human creators. This illusion of neutrality is another potential cause for concern. AI, though impartial by itself, is trained by people and data full of biases. It cannot make entirely fair decisions because its training was not based on entirely fair premises. Unfortunately, this bias often skews against already disadvantaged populations, bringing forth age-old discrimination in a new, digital form. Admittedly, progress has been made, but it takes time to combat centuries of prejudice. Thus, when training AI, engineers must be aware of the role subjectivity plays in human decision-making and its implications for new technology.

Technological developments are the foundation of modern society, but advances in AI can also feel like a constant balancing act between help and harm. The most important thing to keep in mind while evaluating its morality is that ultimately, AI is just a means to an end, a tool to aid humans in whichever avenue they choose.